Conversational User Interface

Spring 2019

Virtual Agent

Speculative Design

CUI

Speculation

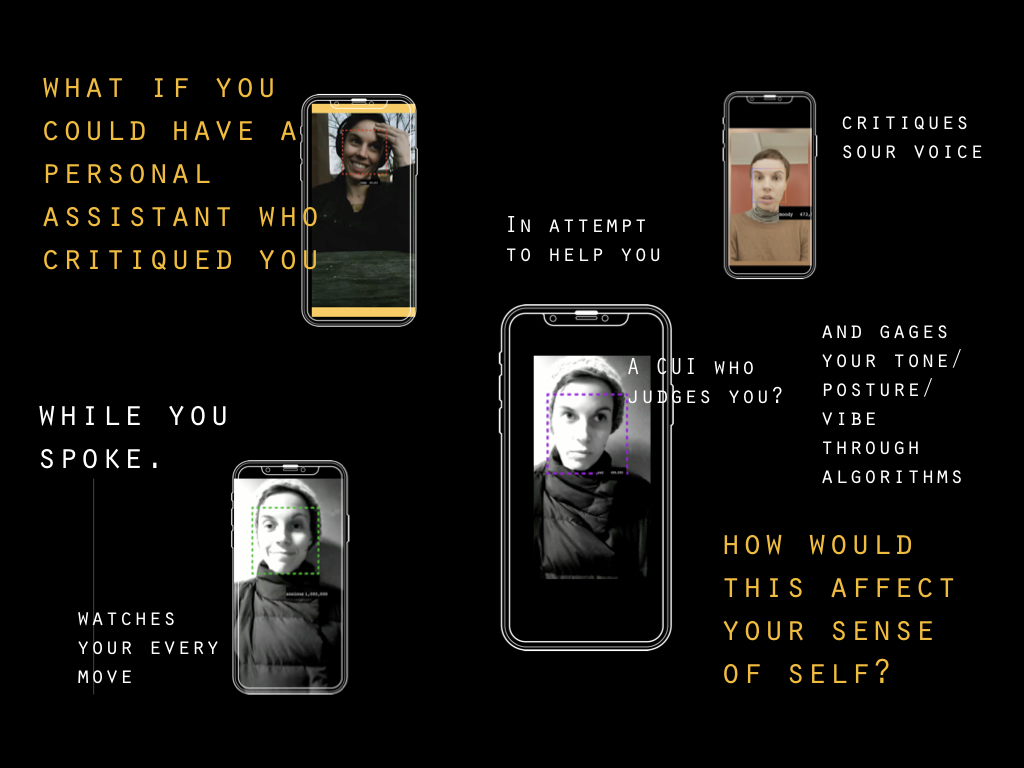

When thinking about Conversational User Interfaces, I went down a research rabbit hole of downloading “self help” apps with therapy bots. I had conversations with a few of them and found, in the end, that I left without any deeper insight into myself. So my question is this: How can a virtual agent provide an interesting experience that allows the human interactor to reflect upon themselves in an unusual and insightful way?

What are some moments in real life when these flashes of insight occur, when we find ourselves thinking, “Huh? Am I really that self-absorbed, difficult to communicate with, or particularly pleasant?” These are the moments when someone challenges your sense of self. It is healthy for the ego to be kept in check time and time again. Can a virtual agent serve as an ego checker?

I looked into therapy techniques that reflect back to the client their sense of self, either to challenge it or to help build up an identity from zero. Some have the client stand in front of a mirror while working through issues with how they perceive themselves. Other techniques take the form of mimicking or mirroring the client's behavior: the therapist reflects back a particular posture or energy the client is giving off, so the client can in turn see themselves in the therapist. Other ways of gaining an alternative perception of self include directly asking a trusted friend what they truly think of you or, in more emboldened circumstances, taking mind-altering drugs.

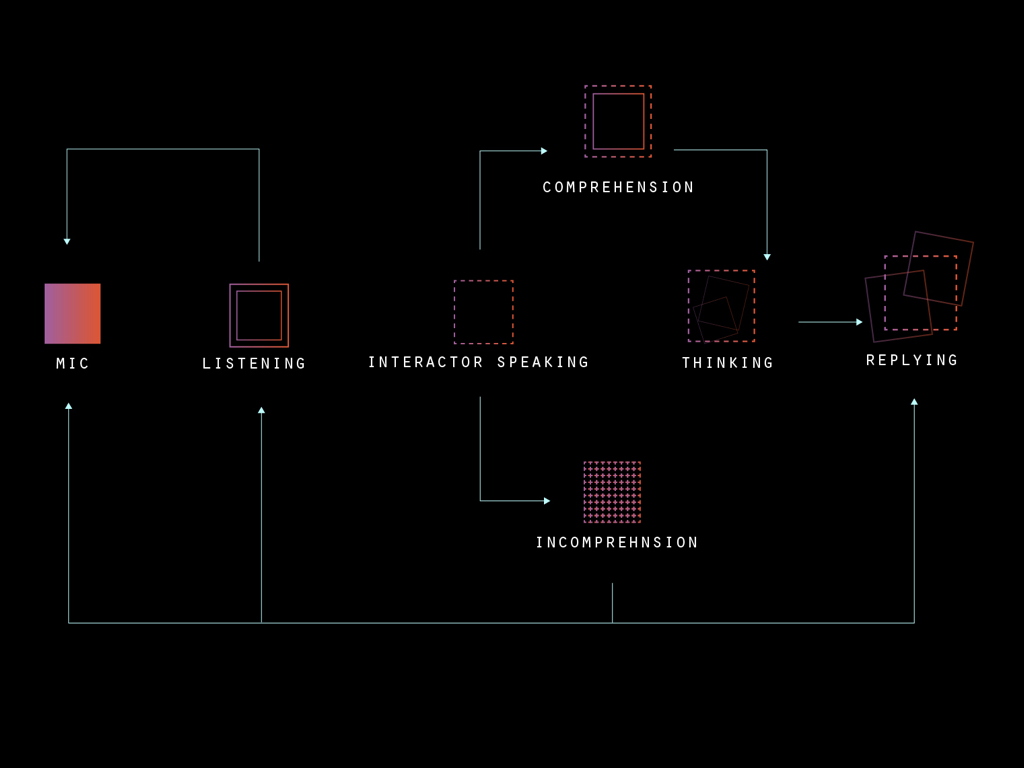

Then I started thinking about how AI bots fall short. They try to meet the human interactor as if they were human themselves, with the capacity for real empathy or a knowledge bank of lived experience like the one humans have. And they just don’t. They do, however, have the ability to provide insight in an acutely detailed, algorithmic way that humans cannot. Which raises the question:

What does it feel like to be mirrored by an AI bot that generates a sense of self through a programmed, algorithmic language?

Instead of drawing on lived experience the way humans do, a bot can assess the emotions, feelings, and “essences” of a person through facial, tonal, and verbal “readings,” using programmed rules for scoring each one. If the corners of your mouth curve down toward your chin, you might be a certain percentage “sad.” If your eyes light up and your pupils dilate when you talk about your mother, your assessment will tilt toward a certain amount of “happiness.”
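
To make the idea of a programmed “reading” concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Reading` fields, the value ranges, and the weights are invented, and a real system would derive such signals from camera and microphone input rather than hand-typed numbers.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One hypothetical snapshot of a face; both fields are invented for illustration."""
    mouth_curve: float     # -1.0 (corners pulled toward the chin) to 1.0 (fully upturned)
    pupil_dilation: float  # 0.0 (baseline) to 1.0 (fully dilated)


def clamp(value: float) -> float:
    """Keep a score inside the 0..1 range."""
    return max(0.0, min(1.0, value))


def mirror(reading: Reading) -> dict[str, int]:
    """Turn a reading into the percentages the bot would reflect back."""
    # A downturned mouth counts directly toward "sad".
    sad = clamp(-reading.mouth_curve)
    # An upturned mouth and dilated pupils each contribute half of "happy".
    happy = clamp(0.5 * max(reading.mouth_curve, 0.0) + 0.5 * reading.pupil_dilation)
    return {"sad": round(sad * 100), "happy": round(happy * 100)}


# A mouth curved halfway toward the chin, pupils at baseline.
print(mirror(Reading(mouth_curve=-0.5, pupil_dilation=0.0)))
```

The bluntness is the point of the sketch: the bot has no lived experience to draw on, only a rule that converts a curve into a number.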

Self Help CUI

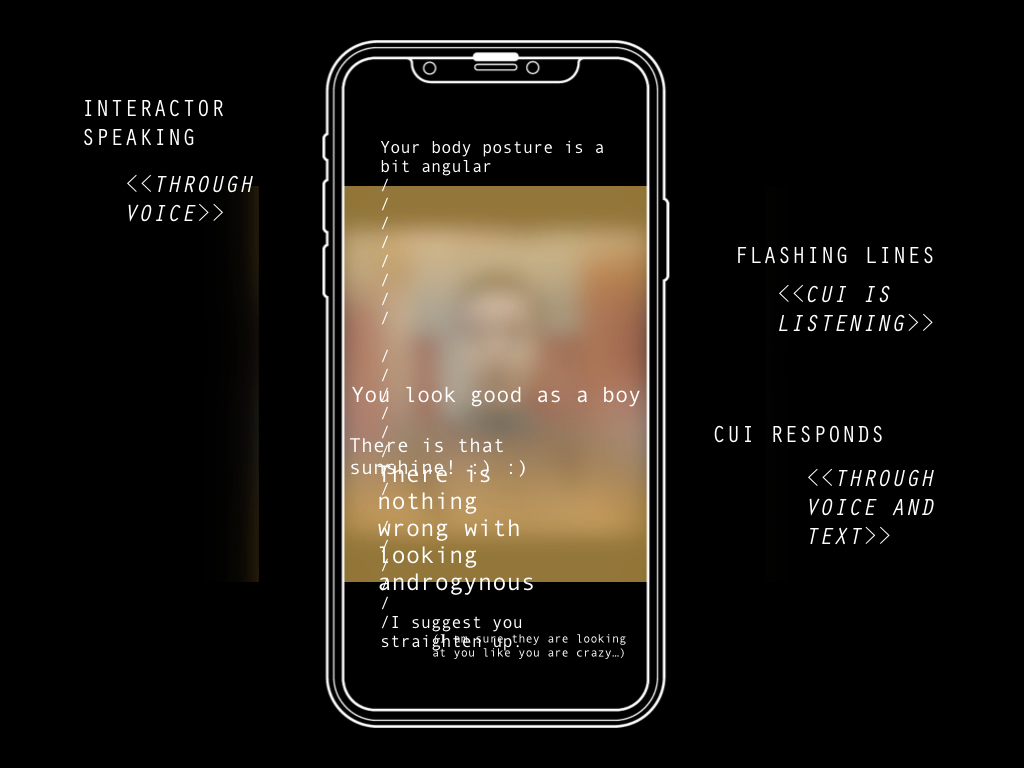

Wider Implication

When would this type of judgement be useful? Through intentional interactions where you speak freely and the bot “corrects” your behavior? Or is it on all the time in the background, interrupting your routine with the same suggestions: “Smile! Straighten your posture! You look tired…” What happens when you collect these moments of reflection into one large catalogue? What kind of insights can you gain about yourself? Would you be personally offended by the bot, or emotionally affected? Could it provide a call to action to truly change?